Authors: Fadi Almachraki & Morgan Harrell

This post describes how system-driven thinking can be used to develop effective machine learning and artificial intelligence (AI) methods using complex clinical data to improve patient care.

The US healthcare system is growing more complex, with clinical challenges, operational inefficiencies and a myriad of other onerous problems. Machine learning and artificial intelligence (AI) are emerging as powerful tools that can leverage valuable clinical data to guide patients and providers and improve the standard of care. Using AI effectively to improve patient care requires end-to-end solution implementation. In this post, we detail the fundamental thought process behind this type of end-to-end solution-building framework.

The healthcare industry is generating data in volumes that double every two years. There is a demand for efficient computational frameworks that synthesize healthcare data for production-ready applications to support patient care. These frameworks have the potential to drive substantial improvements in clinical outcomes. Reaching that potential depends on how well those computational frameworks fit within current clinical workflows and the degree to which they are designed with end-to-end, systems-oriented thinking. Here, we will highlight the importance of such systems-oriented thinking by contrasting two hypothetical use cases for AI-backed model deployments at a hospital system. Then, we will summarize the crucial elements of a well-designed solution.

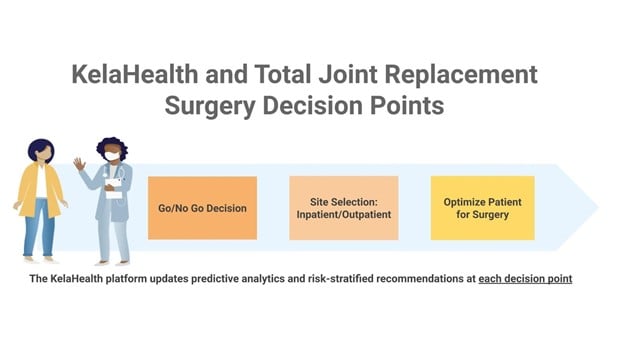

Consider Hospital A and Hospital B, both aiming to operationalize a machine learning model that predicts 30-day readmissions for patients undergoing orthopedic surgery.

Hospital A is driven by the constant pressure to innovate, the need to iterate quickly, and the allure of enhanced predictive modeling techniques for their cross-functional team of clinicians, data scientists and business partners. The team develops a project plan, partners with IT to collect the required data, and begins trying to build the best possible predictive model for readmissions. Two weeks into the project, the team is halfway through a timeline that is most likely headed towards failure and, in the best case, towards weeks of lost time. Let’s contrast this with Hospital B’s experience before exploring why Hospital A is likely to fail.

Hospital B takes a different approach. The team begins by answering the following questions before even writing a single line of code:

- Who will be the primary users of this model?
- What specifically will the model output be? Is “readmissions” too broad of an area to try to tackle? Should we target specific readmissions for a more nuanced slice of the patient population?
- What will the final user see when the model is run? Will the output be a number or a category and what is the universe of possible outputs?
- What can we ask users to do for each potential model output? And as a result what interventions do we plan to attach to each possible model output for the clinician to follow?
- Can we design a set of interventions that:
  - Fit seamlessly within current clinician workflow?
  - Require minimal additional clinician capacity and funding?
  - Will effectively lower readmission rates in the medium to long term?
- What is the appropriate lower bound for model performance for deployment?
- How can we make sure clinicians trust the model output/recommendations?
- Is model performance more important than model interpretability?
- What are the clinical, financial, operational, compliance and reputational costs of making, and acting on, an incorrect prediction?
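One way to make these answers concrete is to write them down as a small, explicit requirements object before any modeling starts. The sketch below is only an illustration: the class name, the risk tiers, the cut-offs, the interventions and the AUROC floor are all hypothetical placeholders that a real team would replace with its own answers.

```python
from dataclasses import dataclass

# Every name, tier, cut-off and intervention here is a hypothetical
# placeholder, not a value taken from a real hospital deployment.


@dataclass(frozen=True)
class ModelRequirements:
    primary_users: str                       # who will use the model
    target: str                              # what exactly is predicted
    interventions: dict[str, str]            # risk tier -> clinician action
    min_auroc: float                         # lower bound for deployment
    interpretability_over_performance: bool  # which one wins a trade-off


def tier_for(risk: float) -> str:
    """Map a predicted readmission probability to one of three risk tiers."""
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be a probability in [0, 1]")
    if risk >= 0.30:
        return "high"
    if risk >= 0.10:
        return "medium"
    return "low"


def recommended_action(req: ModelRequirements, risk: float) -> str:
    """Return the intervention attached to the tier of this prediction."""
    return req.interventions[tier_for(risk)]


def ready_to_deploy(req: ModelRequirements, validated_auroc: float) -> bool:
    """Check a validated score against the agreed lower bound."""
    return validated_auroc >= req.min_auroc


# Example answers a team might record in the kickoff meeting (assumed values).
requirements = ModelRequirements(
    primary_users="orthopedic discharge nurses",
    target="30-day readmission after orthopedic surgery",
    interventions={
        "high": "schedule a follow-up call within 48 hours",
        "medium": "send written discharge reminders",
        "low": "standard discharge process",
    },
    min_auroc=0.75,
    interpretability_over_performance=True,
)

print(recommended_action(requirements, 0.35))
print(ready_to_deploy(requirements, 0.70))
```

Writing the tiers and interventions down this way forces the team to enumerate the full universe of model outputs and to agree on a deployment threshold before any model exists.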

The difference in approach between Hospital A and Hospital B is mostly a function of system-oriented, first-principles thinking. Hospital A quickly pushed for a model and built a staircase to nowhere. Hospital B planned an end-to-end, systems-oriented process, which set them up for successful model adoption and real clinical impact. And while the approach Hospital B utilized can be easily mistaken for the eye-rolling, innovation-stifling bureaucratic red tape one often encounters in healthcare’s heavily regulated data ecosystems, one must consider the following facts:

- Answering all the questions outlined as part of Hospital B’s approach can be done in a structured 30-minute meeting that brings together the right stakeholders. In this case, the stakeholders include clinicians, primary and secondary users, data scientists, and project/product managers.
- The answers to the questions above have to come before starting to build the model specifically because said answers impact crucial modeling and design decisions that data scientists and machine learning engineers can use as requirements to build a useful clinical tool. An example of this is the answer to the question about the relative importance of model performance to model interpretability. An entire class of machine learning models and techniques might be off-limits if interpretability is in fact more important than performance, depending on the data and specific use case.
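To illustrate how a single answer can narrow the modeling options, here is a minimal sketch that filters a list of candidate model families by the interpretability requirement. The candidate list and the `interpretable` flags are assumptions made for illustration; whether a given model counts as interpretable depends on the data and the use case.

```python
# Hypothetical candidate list. The "interpretable" flags are a simplifying
# assumption, since interpretability depends on the data and the use case.
CANDIDATE_MODELS = [
    {"family": "logistic regression", "interpretable": True},
    {"family": "shallow decision tree", "interpretable": True},
    {"family": "gradient-boosted trees", "interpretable": False},
    {"family": "deep neural network", "interpretable": False},
]


def allowed_families(interpretability_first: bool) -> list[str]:
    """Return the model families the team may consider.

    When interpretability outranks performance, families flagged as
    non-interpretable are excluded; otherwise every family is allowed.
    """
    return [
        model["family"]
        for model in CANDIDATE_MODELS
        if model["interpretable"] or not interpretability_first
    ]


print(allowed_families(interpretability_first=True))
print(allowed_families(interpretability_first=False))
```

If the stakeholders decide interpretability comes first, the data scientists know before writing any modeling code that half of this hypothetical candidate list is off the table.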

In short, building an effective clinical tool that is backed by the latest innovations in machine learning and data science demands the foresight, patience and second-order thinking that are usually embedded within system-oriented, end-to-end solution development.